Memory Management

(SOURCE: http://stargazer.bridgeport.edu/sed/projects/cs503/Spring_2001/kode/os/memory.htm and Wikipedia)

Memory management is the act of managing computer memory. The essential requirement of memory management is to provide ways to dynamically allocate portions of memory to programs at their request, and freeing it for reuse when no longer needed. This is critical to the computer system.

Several methods have been devised that increase the effectiveness of memory management. Virtual memory systems separate the memory addresses used by a process from actual physical addresses, allowing separation of processes and increasing the effectively available amount of RAM using paging or swapping to secondary storage. The quality of the virtual memory manager can have an extensive effect on overall system performance.

Memory Manager

The purpose of the memory manager is

- to allocate primary memory space to processes

- to mao the process address space into the allocated portion of the primary memory

- to minimize access times using a cost-effective amount of primary memory

Memory Management Algorithms

In an environment that supports dynamic memory allocation, the memory manager must keep a record of the usage of each allocatable block of memory. This record could be kept by using almost any data structure that implements linked lists. An obvious implementation is to define a free list of block descriptors, with each descriport containing a pointer to the next descriptor, a pointer to the block, and the length of the block. The memory manager keeps a free list pointer and inserts entries into the list in some order conducive to its allocation strategy. A number of strategies are used to allocate space to the processes that are competing for memory.

Best Fit - The allocator places a process in the smallest block of unallocated memory in which it will fit.

Problems:

- It requires an expensive search of the entire free list to find the best hole.

- More importantly, it leads to the creation of lots of little holes that are not big enough to satisfy any requests. This situation is called fragmentation, and is a problem for all memory-management strategies, although it is particularly bad for best-fit.

Solution:

One way to avoid making little holes is to give the client a bigger block than it asked for. For example, we might round all requests up to the next larger multiple of 64 bytes. That doesn't make the fragmentation go away, it just hides it.

- Unusable space in the form of holes is called external fragmentation

- Unusable space in the form of holes is called external fragmentation

Worst Fit - The memory manager places process in the largest block of unallocated memory available. The ides is that this placement will create the largest hole after the allocations, thus increasing the possibility that, compared to best fit, another process can use the hole created as a result of external fragmentation.

First Fit - Another strategy is first fit, which simply scans the free list until a large enough hole is found. Despite the name, first-fit is generally better than best-fit because it leads to less fragmentation.

Problems:

- Small holes tend to accumulate near the beginning of the free list, making the memory allocator search farther and farther each time.

Solution:

- Next Fit

Next Fit - The first fit approach tends to fragment the blocks near the beginning of the list without considering blocks further down the list. Next fit is a variant of the first-fit strategy.The problem of small holes accumulating is solved with next fit algorithm, which starts each search where the last one left off, wrapping around to the beginning when the end of the list is reached (a form of one-way elevator)

Compaction

Compaction attacks the problem of fragmentation by moving all the allocated blocks to one end of memory, thus combining all the holes. Aside from the obvious cost of all that copying, there is an important limitation to compaction: Any pointers to a block need to be updated when the block is moved. Unless it is possible to find all such pointers, compaction is not possible. Pointers can stored in the allocated blocks themselves as well as other places in the client of the memory manager. In some situations, pointers can point not only to the start of blocks but also into their bodies. For example, if a block contains executable code, a branch instruction might be a pointer to another location in the same block. Compaction is performed in three phases. First, the new location of each block is calculated to determine the distance the block will be moved. Then each pointer is updated by adding to it the amount that the block it is pointing (in)to will be moved. Finally, the data is actually moved. There are various clever tricks possible to combine these operations.

Virtual Memory

Virtual memory strategies allow a process to use the CPU when only part of its address space is loaded in the primary. In this process, each process's address space is partitioned into parts that can be loaded into primary memory when they are needed and written back to secondary memory otherwise.

Virtual Memory

- support programs that are larger than physical memory

- allow several programs to reside in physical memory at a time (or atleast the currently relevant portions of them) to support multiprogramming.

Virtual Addressing

The essence of virtual memory systems is in developing efficient techniues for dynamic loading- binding virtual addresses to physical addreses at runtime. In virtual memory systems, the size of the virtual address space is greater than the size of the physical address space allocated to the process; that is, the process uses more virtual than physical addreses.

- Physical Addressing leads to memory fragmentation problems.

- A solution is Virtual Addressing.

>Each process appears to have its own private memory that starts at

address 0. Processes are protected from each other.

>Process can be moved in memory by changing its base address

Managing Virtual Memory

Normally, blocks of information are taken from the disk and placed in the memory of the processor. The most common ways of determining the sizes of the blocks to be moved into and out of memory are:

- Swapping

- Paging

- Segmentation

Swapping

If a disk is available, we can also swap blocked jobs out to disk. Swapping out a job involves copying all memory containing data (and program code if it has changed) onto the disk, and releasing the main memory that it was using. The process is then suspended until there is an opportunity to swap it back in again. All data and program code is restored from the copy on disk, allowing the process to continue running in main memory from the point before it was swapped out.

When a job finishes, we first swap back jobs from disk before allowing new jobs to start. When a job is blocked (either because it wants to do I/O or because our short-term scheduling algorithm says to switch to another job), we have a choice of leaving it in memory or swapping it out. One way of looking at this scheme is that it increases the multiprogramming level (the number of jobs ``in memory'') at the cost of making it (much) more expensive to switch jobs.

A variant of the MLFQ (multi-level feedback queues) selection algorithm is particularly attractive for this situation. The queues are numbered from 0 up to some maximum. When a job becomes ready, it enters queue zero. The CPU scheduler always runs a job from the lowest-numbered non-empty queue (i.e., the priority is the negative of the queue number). It runs a job from queue i for a maximum of i quanta. If the job does not block or complete within that time limit, it is added to the next higher queue. Jobs with short quanta in that short bursts get high priority, but does not incur the overhead of frequent swaps between jobs with long bursts.

Paging

- Paging allocates memory in fixed-size chunks called pages. A bounds register is not needed because all pages are the same size; if you need less than a page the rest is wasted (internal fragmentation).

- Each process has a page table that maps from the virtual page to physical page

- Page table can be large. Assume 32-bit address space, 4KB page, 4 bytes per page table entry. How big is the page table for each process?

- Say you have a PM(Physical memory) of size 16K.

You can support a large number of programs each of size say 1M.

Programs are provided with a virtual address space (1M).

OS takes care of fetching the location (from PM or from disk).

eg. load R, [20K] - This is a virtual address

programs thus work with virtual addresses. It is the OS' job to find out where it is and get the value. This is done by a mechanism called paging.

Divide the entire virtual address space into units called pages. eg. for the 1 MB, VAS, you have 256 virtual pages (0 ... 255)

Divide the physical memory into pages. eg. for the 16K physical memory, you have 4 pages (0 .. 3)

At any time some (atmost 4) of the virtual pages could be in one of the physical pages. The rest are on the disk in what is called the swap space.

A page is also the unit of transfer between the disk (swap space) and physical memory.

You keep track of which virtual page is in physical memory (if so where), and which is not.

This information is maintained in a page table. PT thus performs the mapping from VA(Virtual Address) to PA (Physical Address).

page table (indexed by virtual address) entry =

Note that this has to be done on every reference (both data and code), which can turn out to be very costly to do by software.

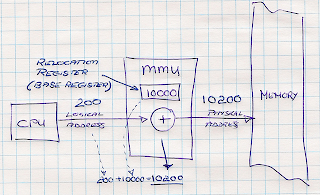

Usually this is done by a hardware unit called the MMU (which sits between the CPU and the cache).

- Steps performed by the MMU:

>given a VA, index into the PT(Page Table)

>check if present, if so, put out phys address on the bus and let the memory operation be performed

>what do you do if absent?

-you have to go to disk and get it.

-We do not want to do this by hardware.

-Software should gain control at this point (access control).

-Hardware raises a page fault.

-This fault is caught by the OS, the corresponding page is brought in from the disk into a physical page, the PT entry is updated. In the process, a victim may need to be thrown out and its entry invalidated.

Multiple processes can be handled by either switching the address mappings at context switch times, or by simply extending the VAS with a few more bits (process id).

- What does a Page Table entry contain:

>physical pg #,

>present/absent bit,

>protection (read/write/execute),

>modified - on a write this is set. Will be useful when the page has to be replaced to find out if it has to be written to disk.

>referenced - set each time you read/write to the page. (will be used later)

>caching disabled - says do not cache it. Useful for I/O devices that are memory mapped.

- Paging Issues:

>Page tables are Large:

where is the PT itself stored? Well, if it is very small (a few entries), then you could just keep it in a few registers (or on chip somewhere). But typically they are large. For instance, 32 bit address space, and 4K page size => 1M pages 64 bit address spaces => ? We don't have that much memory. So what do we do? Usually a process does not access all of its address space at once. Exploit this locality factor.

Solution: multi-level page tables (like paging the page tables themselves). eg. 2-level PT, 32 bit VA = [10, 10, 12]. if a program is using some code, a little data and stack, then maybe he needs only the first, second and last entries of the first level PT.

>Mapping Must be Fast:

Translation is done on every memory reference (instruction fetch,load/store)

So it has to be fast.

The above mechanisms take atleast 1 additional memory reference (PT lookup) And for multi-level PTs this is even worse.

Make use of the locality in typical executions:

most programs tend to make a large no of references to a small no of pages

there is both temporal and spatial locality with respect to what you are likely to access in the future relative to what you accessed in the past.

Solution:

Transalation Lookaside Buffer

No comments:

Post a Comment